Build. Train. Deploy

Simulate the Real World, At Scale.

AuraSIM is a generative simulation platform that lets you create photorealistic, sensor-accurate simulation environments from text prompts. Train robots in hundreds of scenarios before deploying them on site.

AuraSIM works with robotics AI companies, Indian government agencies, and enterprises.

Train robots like they’re already in the field

AuraSIM replicates real-world complexity, so you can develop, test, and deploy robotics systems with confidence and speed.

Large World Modeling

Captures spatial, physical, and contextual dynamics of complex environments.

Sensor & Actuator Simulation

Emulates real-world inputs (e.g., cameras, LiDAR, force sensors) and hardware behavior with precision.

Autonomy Testing

Stress-test navigation, manipulation, and edge-case logic in synthetic but realistic scenarios.


Cloud-Native Scalability

Train models, run multi-agent scenarios, and iterate faster, entirely in the cloud.

Real-to-Sim Transfer

Bridges the gap between synthetic training and real-world performance through adaptive simulation.

Design, Develop, and Deploy Full-Stack Robot Systems

From idea to deployment, AuraSIM lets you simulate environments, train autonomy, and validate robotic systems virtually with unprecedented speed and precision.

Your Simulation Workflow, Accelerated

AuraSIM connects your robotics stack with a generative simulation engine that accelerates development across perception, planning, and control.

Design your virtual factory or any world

Digitally recreate your environment, from warehouses to assembly lines, using natural language or floorplans, ready for instant simulation.

Develop and train autonomy for your robots

Simulate diverse real-world scenarios and edge cases to train, test, and validate navigation, manipulation, and safety systems.

Validate your solution

Iterate faster with sensor-accurate feedback and cloud-based validation workflows. Move from sim to site in a fraction of the time.

Deploy with Confidence

Seamlessly transfer learnings from simulation to real-world systems. Reduce on-site calibration and deployment risks with high-fidelity modeling.

How It Works

Step 1: Describe Your Environment

Use natural language or floorplans to define your environment: warehouses, labs, factories, or custom terrains.

Step 2: Generate Simulation with Sensor Fidelity

AuraSIM transforms your prompt into a photorealistic, physics-accurate environment with simulated sensors like LiDAR, cameras, GPS, and IMUs.

Step 3: Plug In Your Robot Code

Integrate your autonomy stack (SLAM, motion planning, perception) via ROS or your preferred middleware. Run simulations as if on hardware.

Step 4: Train and Iterate in the Loop

Simulate edge cases, modify parameters on the fly, and train AI agents across hundreds of scenes with programmatic control.

Step 5: Deploy with Confidence

Export trained models and validated code for deployment on real robots with reduced site calibration, safer rollouts, and faster time to field.

AuraSIM Features

We train industrial robots to optimize performance, enhance precision, increase adaptability, and enable intelligent automation across modern manufacturing environments.

Text-to-Simulation Engine

Generate complex 3D environments from simple descriptions, with no manual modeling needed.

Sensor Simulation Suite

Includes LiDAR, stereo cameras, depth sensors, IMU, and GPS, all with configurable noise, latency, and distortion models.

Robot Code Integration

Compatible with ROS1/ROS2, custom SDKs, and Python APIs. Upload and test your real robot logic inside the sim.

Cloud-Native & Scalable

Run thousands of parallel simulations on the cloud, scale your training pipeline, and access via web or API.
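As a rough illustration of the fan-out pattern behind parallel simulation runs, the sketch below spreads seeded toy scenarios across local worker threads. It does not use the AuraSIM API: `simulate_scenario`, `run_batch`, and the "clutter" parameter are hypothetical stand-ins, and local threads stand in for cloud workers.

```python
import random
from concurrent.futures import ThreadPoolExecutor

def simulate_scenario(seed):
    """Hypothetical stand-in for one simulation run; returns a toy result."""
    rng = random.Random(seed)
    clutter = rng.uniform(0.0, 1.0)   # randomized scenario parameter
    success = clutter < 0.8           # toy rule: very cluttered scenes fail
    return {"seed": seed, "clutter": clutter, "success": success}

def run_batch(seeds, max_workers=8):
    """Fan scenarios out across workers and collect results in seed order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(simulate_scenario, seeds))

results = run_batch(range(100))
success_rate = sum(r["success"] for r in results) / len(results)
print(f"success rate: {success_rate:.2f}")
```

Seeding each scenario makes a failed run reproducible in isolation, which matters more as the batch size grows.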

Closed-Loop Training Support

Loop in RL agents, perception modules, or planning stacks. Perfect for data generation, failure analysis, and AI validation.

Analytics & Evaluation Tools

Visualize trajectories, sensor output, collision events, and performance KPIs in real time or batch mode.

AuraML Insight Partner Program

The Insight Partner Program is an exclusive initiative for forward-thinking robotics teams, researchers, and integrators who want to shape the future of generative simulation.

By partnering early with AuraML, you'll get access to cutting-edge features, direct influence on roadmap priorities, and dedicated support to accelerate your robotics development.

What You Get

Early Access to AuraSIM: Get hands-on with unreleased capabilities and influence how new features evolve.

Dedicated Support & Onboarding: Work closely with our engineering team to integrate AuraSIM into your existing stack.

Joint R&D Opportunities: Co-develop custom scenarios, sensors, or interfaces tailored to your domain.

Priority in Feature Requests: Your product needs help shape our roadmap. Insight partners get fast-tracked feedback loops.

Showcase & Visibility: Be featured as a launch partner in case studies, demos, and global conferences.

Who Should Apply

Robotics product companies building autonomous solutions

System integrators looking to simulate custom deployments

Research labs and universities advancing robotics AI

Industrial teams needing fast, realistic simulation for training & testing

Simulation Meets Reality

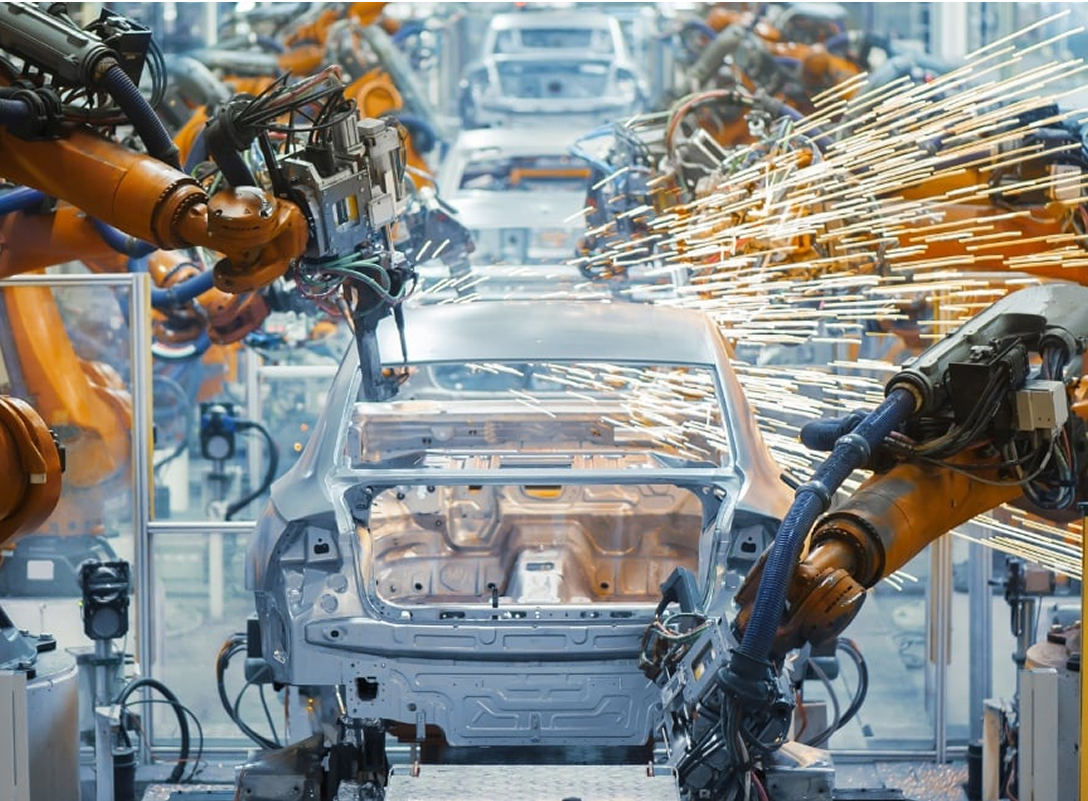

Industrial Automation


Virtual Factories. Real Automation

Robotic Arms (welding, assembly)

SCARA & Delta Robots (pick-and-place)

CNC-Integrated Bots

Mobile Manipulators

Validate assembly workflows

Train precise, multi-step operations

Run HRI and fault-handling scenarios

Logistics & Warehousing


Train robots to move goods like clockwork

AMRs & AGVs

Sorting Robots

Pick-and-Place Vision Bots

Palletizers

Optimize fleet coordination

Test last-meter navigation

Run HRI and fault-handling scenarios


Defense & Aerospace


De-risking high-stakes autonomy

UGVs and UAVs

Recon Drones

Surveillance Crawlers

Tethered Inspection Units

Test in tactical or unstructured terrain

Validate mission logic

Train multi-agent recon and AI perception

Agriculture Robotics


Smarter fields. Adaptive farming

Harvesting Robots

Autonomous Tractors

Drones for crop monitoring

Weeding/irrigation platforms

Simulate terrain and crop variability

Train plant health monitoring AI

Precision farming scenario testing

Energy & Utilities


Robots that inspect, climb, and dive

Pipeline Crawlers

Substation Maintenance Bots

Wind Turbine Inspectors

Underwater ROVs

Wall-Climbing Robots

Hazard modeling (heat, wind, pressure)

Validate remote maintenance operations

Train vision and control in extreme environments

AuraSIM Technical FAQ

AuraSIM supports a wide range of robots including industrial arms, AMRs, drones, quadrupeds, and humanoids. You can import URDF/XACRO files or use our SDK for custom platforms.
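Before importing a robot description, it can help to check what the URDF actually declares. The sketch below parses a small hand-written URDF with Python's standard library and lists its links and joints. The `demo_arm` robot and the `summarize_urdf` helper are illustrative assumptions, not part of AuraSIM or its SDK.

```python
import xml.etree.ElementTree as ET

# A tiny hand-written URDF for illustration only (hypothetical two-link arm).
URDF_TEXT = """
<robot name="demo_arm">
  <link name="base_link"/>
  <link name="upper_arm"/>
  <joint name="shoulder" type="revolute">
    <parent link="base_link"/>
    <child link="upper_arm"/>
    <axis xyz="0 0 1"/>
    <limit lower="-1.57" upper="1.57" effort="10" velocity="1.0"/>
  </joint>
</robot>
"""

def summarize_urdf(urdf_text):
    """Return the robot name, link names, and (joint, type, parent, child) tuples."""
    root = ET.fromstring(urdf_text.strip())
    links = [link.get("name") for link in root.findall("link")]
    joints = [
        (
            joint.get("name"),
            joint.get("type"),
            joint.find("parent").get("link"),
            joint.find("child").get("link"),
        )
        for joint in root.findall("joint")
    ]
    return root.get("name"), links, joints

name, links, joints = summarize_urdf(URDF_TEXT)
print(name, links, joints)
```

XACRO files are macros that expand to URDF, so a check like this would apply to the expanded output rather than the XACRO source.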

You provide a prompt (e.g., "a cluttered warehouse with ramps, pallets, and two forklifts"), and AuraSIM’s large world model auto-generates a high-fidelity simulation with structured layouts, objects, and physics, with no manual 3D modeling required.

Supported sensors include:

- LiDAR (2D/3D)

- RGB + Depth Cameras (mono, stereo)

- IMU

- GPS

- Force/Torque sensors

All with configurable noise, latency, distortion, and frame sync.
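To make "configurable noise and latency" concrete, here is a minimal sketch of how such a sensor model can work in principle: Gaussian noise is added to ideal range readings, and a buffer delays delivery by a fixed number of frames. The `NoisyDelayedSensor` class and its parameters are assumptions for illustration and do not reflect AuraSIM's actual configuration interface.

```python
import random
from collections import deque

class NoisyDelayedSensor:
    """Wraps ideal range readings with Gaussian noise and a fixed delay in frames."""

    def __init__(self, noise_std, latency_frames, seed=0):
        self.noise_std = noise_std
        self.latency_frames = latency_frames
        self.rng = random.Random(seed)
        # Holds scans that have been measured but not yet delivered.
        self.buffer = deque()

    def measure(self, true_ranges):
        """Push a new ideal scan; return the scan from latency_frames ago, or None."""
        noisy = [r + self.rng.gauss(0.0, self.noise_std) for r in true_ranges]
        self.buffer.append(noisy)
        if len(self.buffer) > self.latency_frames:
            return self.buffer.popleft()
        return None  # nothing is old enough to deliver yet

sensor = NoisyDelayedSensor(noise_std=0.02, latency_frames=2, seed=42)
for frame in range(4):
    delivered = sensor.measure([1.0 + frame, 2.0 + frame])
    print(frame, delivered)
```

With `latency_frames=2`, the first two calls return `None` and the third returns the noisy version of the first scan, which is the kind of stale data a controller has to tolerate on real hardware.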

Yes. AuraSIM supports ROS1, ROS2, Python APIs, and custom interfaces. You can run the same autonomy stack used on the real robot, which makes it well suited to testing SLAM, planning, and control.

Yes. AuraSIM is built with closed-loop training in mind. You can reset environments, inject randomness, control frame rate, and log sensor and state data, which suits RL, imitation learning, and synthetic dataset generation.
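The closed-loop pattern described here (reset, seeded randomness, a fixed frame rate, and logging) can be sketched with a toy environment. Everything below, including `ToyCorridorEnv`, `run_episode`, and the proportional controller, is a hypothetical stand-in written for illustration and is not the AuraSIM API.

```python
import random

class ToyCorridorEnv:
    """Hypothetical 1-D corridor: the robot drives toward a randomly placed goal."""

    def __init__(self, frame_rate_hz=10.0):
        self.dt = 1.0 / frame_rate_hz  # simulated seconds per step
        self.rng = random.Random()
        self.position = 0.0
        self.goal = 0.0

    def reset(self, seed):
        """Re-seed and randomize the goal so each episode is reproducible."""
        self.rng.seed(seed)
        self.position = 0.0
        self.goal = self.rng.uniform(2.0, 5.0)
        return self._observe()

    def step(self, velocity):
        self.position += velocity * self.dt
        done = self.position >= self.goal
        return self._observe(), done

    def _observe(self):
        # A noisy "range to goal" reading stands in for real sensor output.
        return self.goal - self.position + self.rng.gauss(0.0, 0.01)

def run_episode(env, seed, max_steps=200):
    """Drive with a clamped proportional controller and log observation and state."""
    log = []
    obs = env.reset(seed)
    for _ in range(max_steps):
        velocity = max(0.1, min(1.0, obs))  # clamp the commanded speed
        obs, done = env.step(velocity)
        log.append({"obs": obs, "position": env.position})
        if done:
            break
    return log

env = ToyCorridorEnv(frame_rate_hz=20.0)
logs = [run_episode(env, seed) for seed in range(3)]
print([len(log) for log in logs])
```

An RL or imitation-learning pipeline would replace the proportional controller with a policy and consume the logged pairs as training data.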

Yes. AuraSIM supports synchronized multi-agent simulation with collision detection, shared spaces, and task coordination logic.

AuraSIM is designed for cloud-native operation with API orchestration and scale-out training. Local/offline deployment is available for enterprise customers or air-gapped systems.

Yes. You can import custom 3D assets in FBX, OBJ, and USD formats and use them alongside generative environments. CAD layouts and floorplans are also supported.

AuraSIM combines industry-standard physics libraries with AuraML’s custom extensions for contact modeling, friction tuning, and deformable surface simulation (beta).

Yes. We use AES-encrypted storage, signed API tokens, and isolated runtime environments. Enterprise customers can request custom security protocols and on-prem deployment.

AuraSIM is offered under a tiered license model:

- Developer Plan – Pay-as-you-go access, limited concurrency

- Team Plan – Priority compute, advanced API, multi-user support

- Enterprise Plan – Custom SLAs, on-prem deployment, dedicated support

Contact us for a quote or pilot access.

We offer:

- Slack-based dev support

- Dedicated solution engineers for enterprise

- Weekly check-ins during onboarding

- Documentation & SDK updates

Priority support is available for Insight Partners.

Upcoming milestones:

- Domain-randomized simulation for vision training

- Physics-based manipulation (grasping, deformation)

- Scene memory & version control

- Native support for Isaac Sim and MuJoCo backend bridges

Join our Insight Partner Program to influence the roadmap directly.

Still have questions?

Book A Braindate

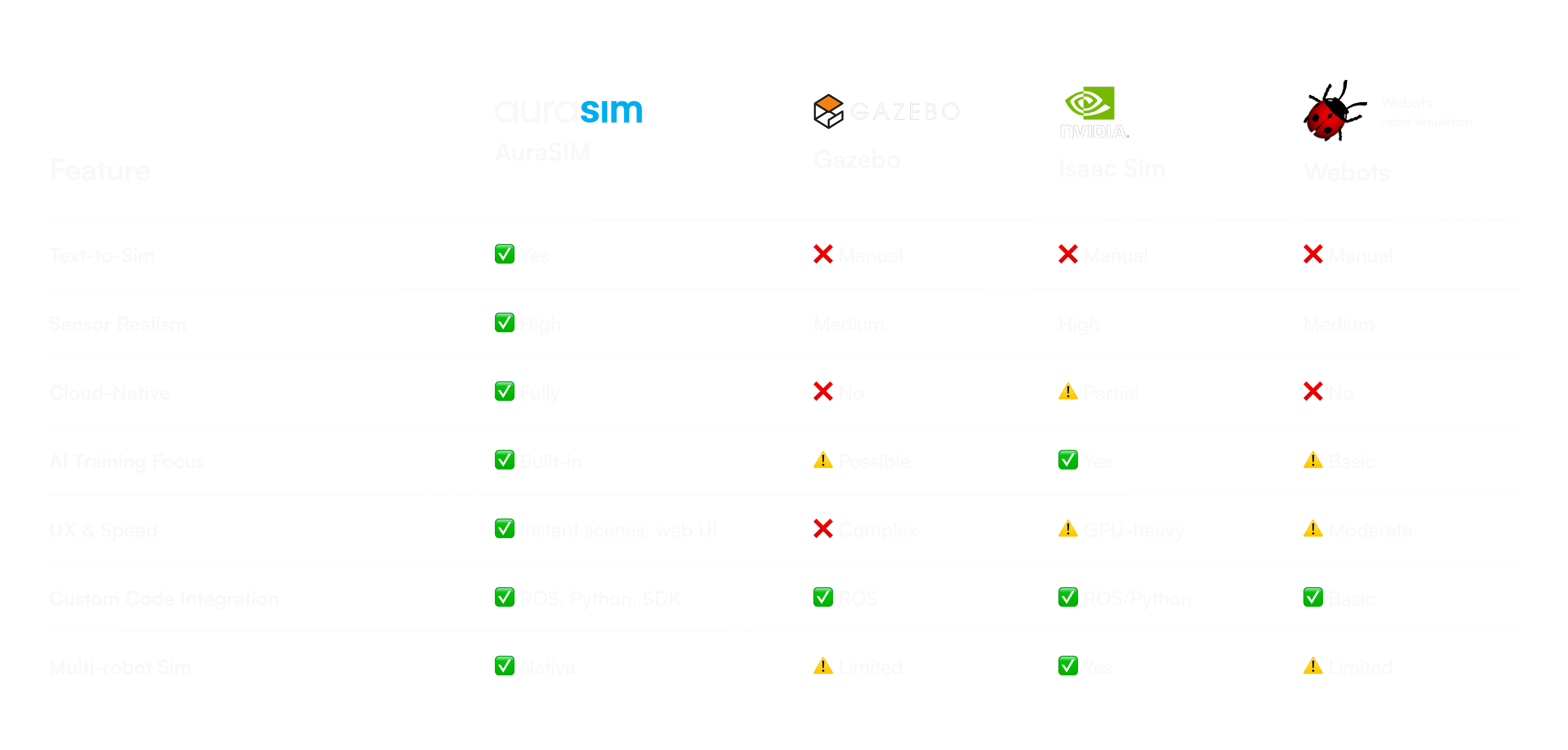

How is AuraSIM different from Gazebo, Isaac Sim, or Webots?


AuraSIM is purpose-built for fast, scalable, and generative simulation. Unlike legacy tools, it focuses on automation, cloud-first workflows, and developer speed.


Pricing plan

AuraSIM is offered under flexible subscription plans designed for teams of all sizes:

(Tailored for large teams, OEMs, and R&D labs)


Contact us
